Fast and accurate numerical differences in R

GitHub repository: https://github.com/Fifis/pnd

PDF of a presentation on the topic at the University of Luxembourg.

In the past, I needed to compute numerical gradients and had been using numDeriv for this task, naïvely thinking that computers could calculate these objects just as they calculate the sine at any point or any digit of pi. Oh, I could not have been further from the truth. Different step sizes yielded different results. Small step sizes were sometimes better, sometimes worse, and I did not know what to do.

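So I started searching. The first reliable source I found on the subject was Timothy Sauer's 'Numerical Analysis' (2017, 3rd ed.). He shows why numerical derivatives always carry an error. The derivative is defined as the limit

\[ f'(x) = \lim_{h\to 0} \frac{f(x+h) - f(x)}{h}, \]

but in a computation h is never zero, so a finite step leaves a truncation error. Making h very small does not help either: in floating-point arithmetic x + h is rounded, and f(x+h) and f(x) become so close that subtracting them cancels most of the significant digits.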
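To see this trade-off in action, here is a minimal sketch in Python (not R, and not the pnd API) that applies a forward difference to sin at x = 1 with steps from 1e-1 down to 1e-15 and compares the result with the exact derivative cos(1). Large steps suffer from truncation error, roughly \(\tfrac{h}{2}|f''(x)|\); tiny steps suffer from rounding error, roughly \(2\varepsilon|f(x)|/h\) with \(\varepsilon\) the machine epsilon, so the error is smallest near \(h \approx \sqrt{\varepsilon} \approx 1.5\times 10^{-8}\). The test function, the point, and the \(\sqrt{\varepsilon}\cdot\max(|x|, 1)\) rule of thumb are illustrative assumptions, not necessarily what pnd does.

```python
import math
import sys


def forward_difference(f, x, h):
    """One-sided (forward) difference approximation of f'(x) with step h."""
    return (f(x + h) - f(x)) / h


def error_table(f, df, x, steps):
    """Map each step size h to the absolute error of the forward difference."""
    exact = df(x)
    return {h: abs(forward_difference(f, x, h) - exact) for h in steps}


if __name__ == "__main__":
    x = 1.0
    steps = [10.0 ** (-k) for k in range(1, 16)]  # 1e-1, 1e-2, ..., 1e-15
    errors = error_table(math.sin, math.cos, x, steps)

    # Assumed rule of thumb for a forward difference: h ~ sqrt(machine epsilon),
    # scaled by |x| so that the step stays meaningful for large arguments.
    eps = sys.float_info.epsilon
    h_rule = math.sqrt(eps) * max(abs(x), 1.0)

    for h, err in errors.items():
        print(f"h = {h:.0e}   |error| = {err:.2e}")
    print(f"rule-of-thumb h = {h_rule:.2e}")
```

Running the script should show the error first shrinking as h decreases and then growing again once rounding dominates. Because the best step depends on f and its higher derivatives, a single fixed step cannot be optimal for every function.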

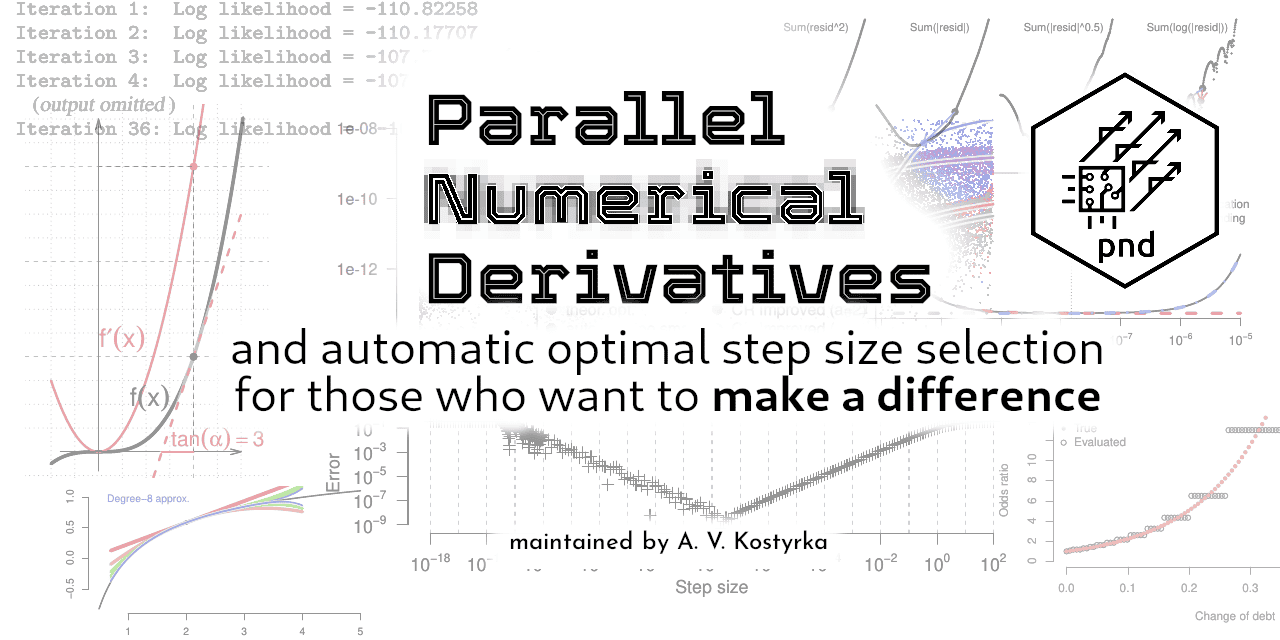

After more than a year of development, I am happy to present a new R package: pnd, for parallel numerical derivatives, gradients, Jacobians, and Hessians. Critique, suggestions, bug reports, and any other form of feedback are welcome!